Enterprise Strategy Group | Getting to the Bigger Truth™

TECHNICAL VALIDATION

Gigamon Hawk Deep Observability Pipeline

Establishing Comprehensive Visibility And Security In Hybrid Cloud And Multi-Cloud Environments

By Alex Arcilla, Senior Validation Analyst

JULY 2022

Introduction

Background

Figure 1. Top 5 Perceptions about the State of Monitoring/Observability Tools in Organizations

Please rate your level of agreement with the following statements related to the application and infrastructure monitoring/observability environment at your organization. (Percent of respondents, N=357)

Source: ESG, a division of TechTarget, Inc.

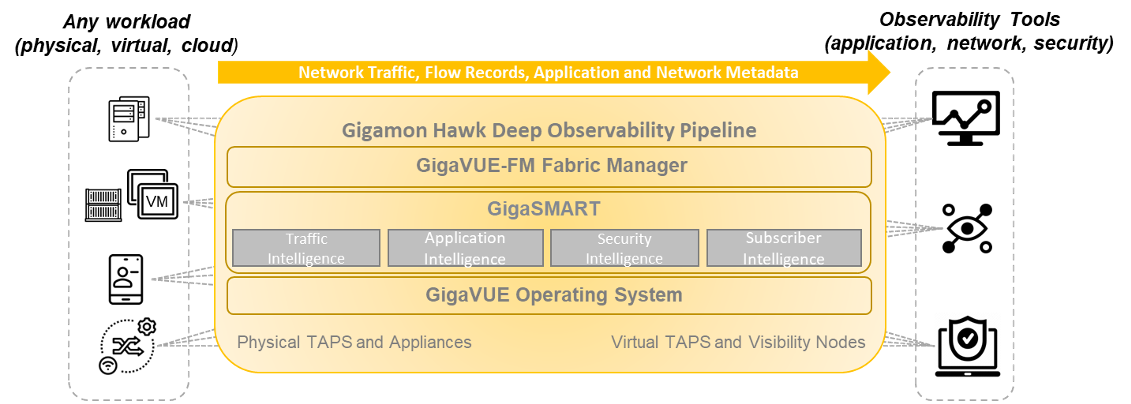

Gigamon Hawk Deep Observability Pipeline

Figure 2. Gigamon Hawk Deep Observability Pipeline

Source: ESG, a division of TechTarget, Inc.

ESG Technical Validation

Security Effectiveness

ESG Testing

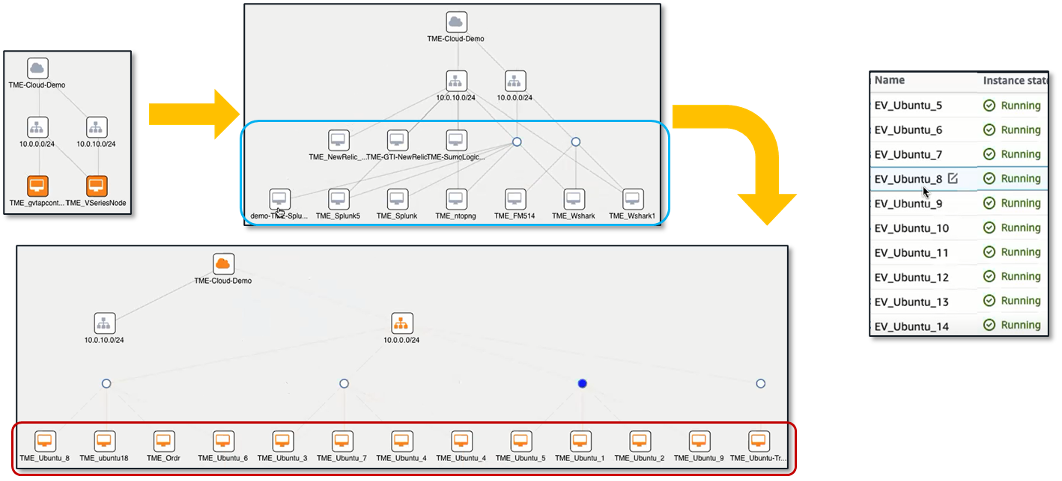

Figure 3. Collapsed and Expanded Views of “TME_AWS” Monitoring Domain

Source: ESG, a division of TechTarget, Inc.

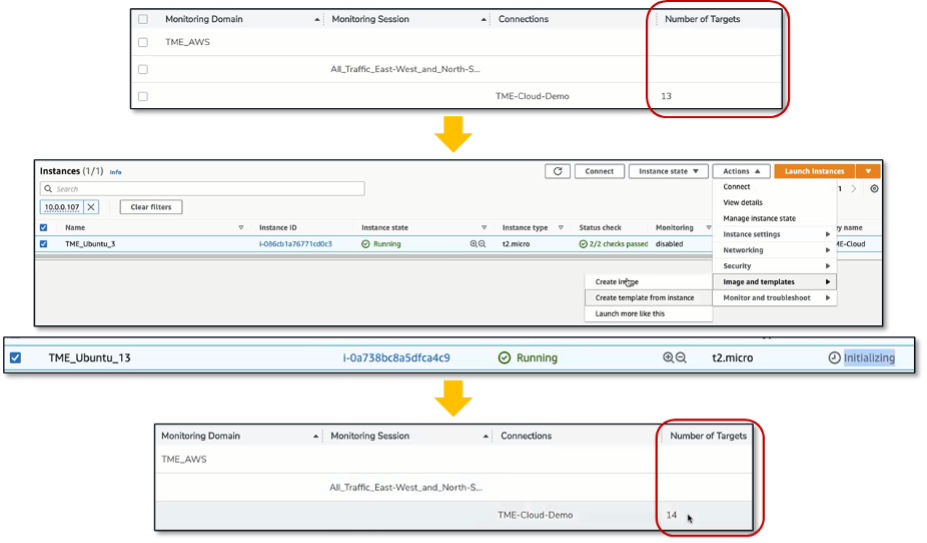

Figure 4. Enabling East-west Traffic Visibility between New and Running AWS EC2 Instances

Source: ESG, a division of TechTarget, Inc.

Why This Matters

Bolstering Security Posture

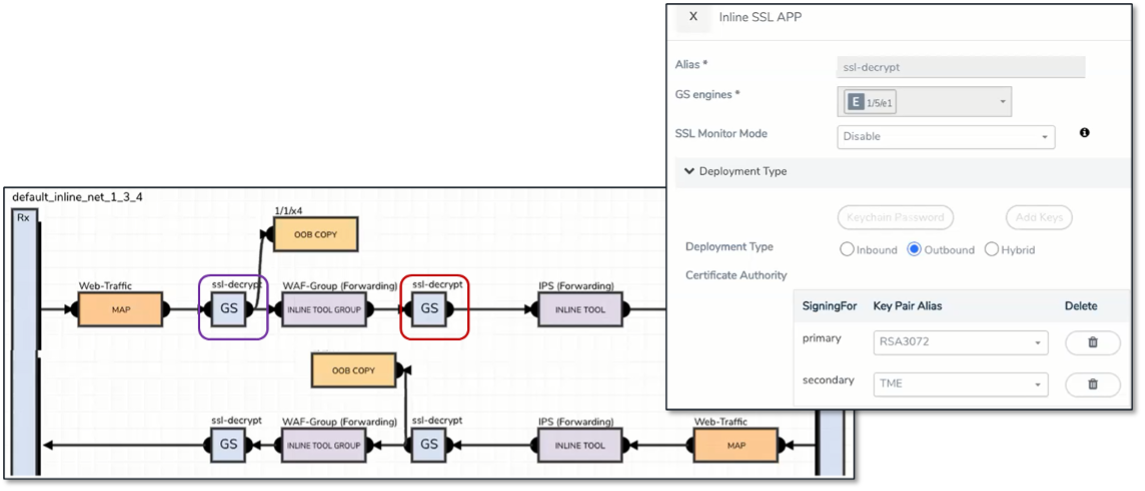

ESG Testing

Figure 5. Leveraging GigaSMART Decryption Capabilities in On-premises Environments

Source: ESG, a division of TechTarget, Inc.

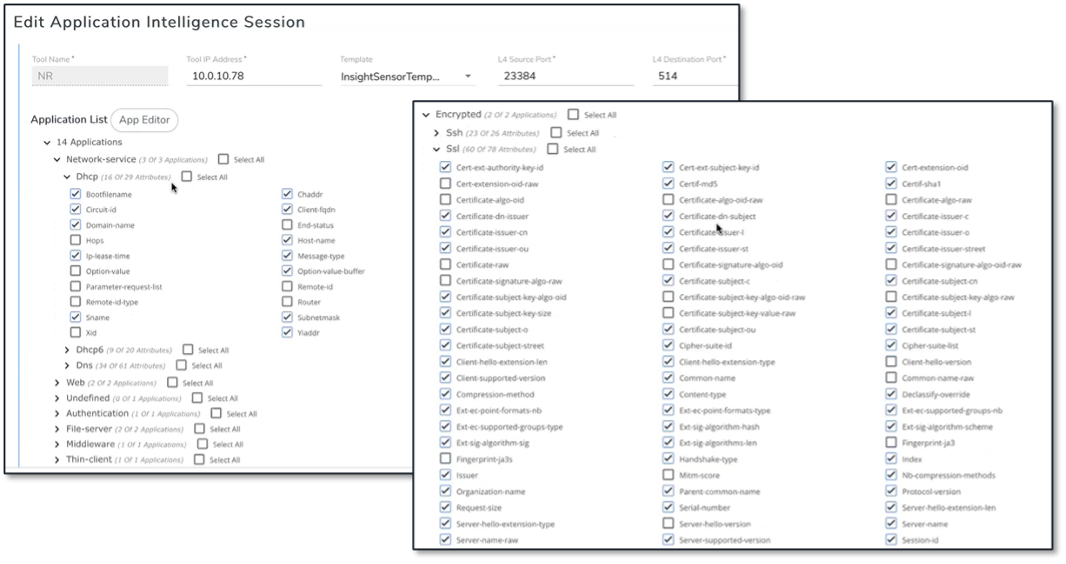

Figure 6. Selecting Criteria for Filtering Network Traffic Based on Application-related Attributes

Source: ESG, a division of TechTarget, Inc.

Figure 7. Intelligence Displayed in New Relic and Datadog

Source: ESG, a division of TechTarget, Inc.

Why This Matters

Preserving Investment in Existing Toolsets

ESG Testing

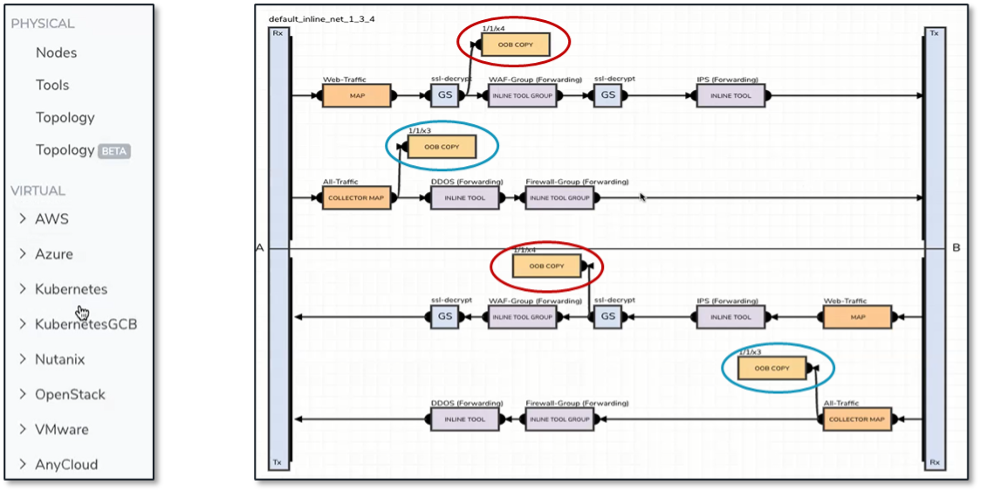

Figure 8. Inventory and On-premises Traffic Collection/Distribution Views

Source: ESG, a division of TechTarget, Inc.

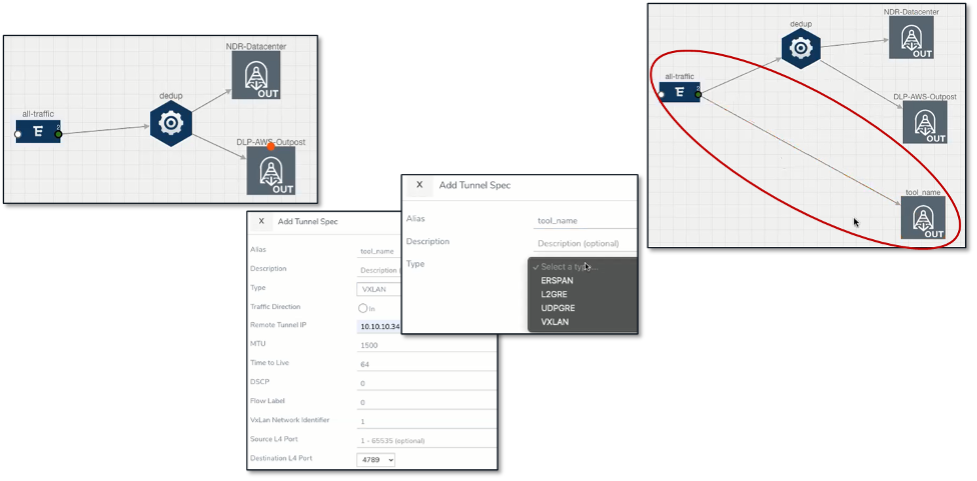

Figure 9. Inventory and Traffic Collection/Distribution Views

Source: ESG, a division of TechTarget, Inc.

Why This Matters

The Bigger Truth

- Achieve hybrid and multi-cloud visibility for north-south and east-west traffic, without the need to switch between CSP-specific tools.

- Maintain security with existing tools, without degrading network performance, by offloading traffic decryption and inspection functions, thus decreasing the time to identify and remediate potential threats and attacks.

- Establish comprehensive visibility without the need to rip and replace existing monitoring tools, as organizations can share traffic data among multiple tools efficiently.

This ESG Technical Validation was commissioned by Gigamon and is distributed under license from TechTarget, Inc.

All product names, logos, brands, and trademarks are the property of their respective owners. Information contained in this publication has been obtained from sources TechTarget, Inc. considers to be reliable but is not warranted by TechTarget, Inc. This publication may contain opinions of TechTarget, Inc., which are subject to change. This publication may include forecasts, projections, and other predictive statements that represent TechTarget, Inc.’s assumptions and expectations in light of currently available information. These forecasts are based on industry trends and involve variables and uncertainties. Consequently, TechTarget, Inc. makes no warranty as to the accuracy of specific forecasts, projections, or predictive statements contained herein.

This publication is copyrighted by TechTarget, Inc. Any reproduction or redistribution of this publication, in whole or in part, whether in hard-copy format, electronically, or otherwise to persons not authorized to receive it, without the express consent of TechTarget, Inc., is in violation of U.S. copyright law and will be subject to an action for civil damages and, if applicable, criminal prosecution. Should you have any questions, please contact Client Relations at cr@esg-global.com.

The goal of ESG Validation reports is to educate IT professionals about information technology solutions for companies of all types and sizes. ESG Validation reports are not meant to replace the evaluation process that should be conducted before making purchasing decisions, but rather to provide insight into these emerging technologies. Our objectives are to explore some of the more valuable features and functions of IT solutions, show how they can be used to solve real customer problems, and identify any areas needing improvement. The ESG Validation Team’s expert third-party perspective is based on our own hands-on testing as well as on interviews with customers who use these products in production environments.

Enterprise Strategy Group is an IT analyst, research, validation, and strategy firm that provides market intelligence and actionable insight to the global IT community.