Enterprise Strategy Group | Getting to the Bigger Truth™

ESG TECHNICAL VALIDATION

Liqid Composable Disaggregated Infrastructure

Configuring Servers On-demand in the On-premises Data Center

By Alex Arcilla, Senior Validation Analyst

APRIL 2021

ESG Technical Validations

Introduction

Background

Figure 1. Top Six Areas of Data Center Modernization for Investments in the Next 12-18 Months

In which of the following areas of data center modernization will your organization make the most significant investments over the next 12-18 months? (Percent of respondents, N=664, five responses accepted)

Source: Enterprise Strategy Group

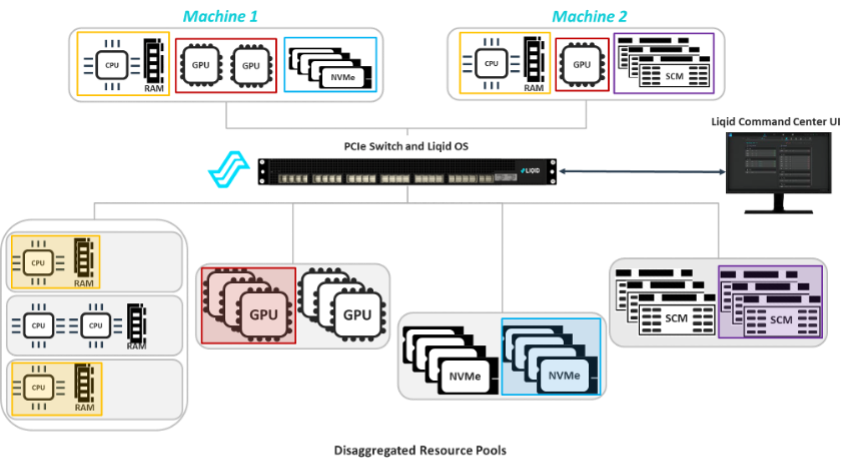

Liqid Composable Disaggregated Infrastructure

Figure 2. Liqid Composable Disaggregated Infrastructure

Source: Enterprise Strategy Group

ESG Technical Validation

Figure 3. Liqid Test Bed for Composing a New Server

Source: Enterprise Strategy Group

Accelerated Time to Value

ESG Testing

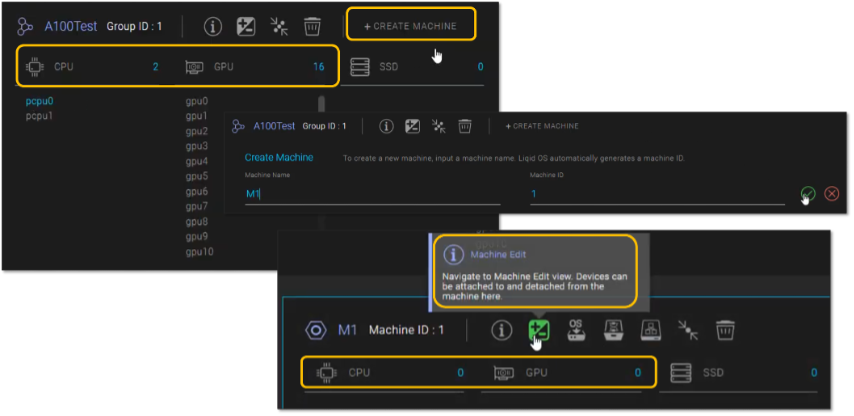

Figure 4. Creating a New Machine “M1”

Source: Enterprise Strategy Group

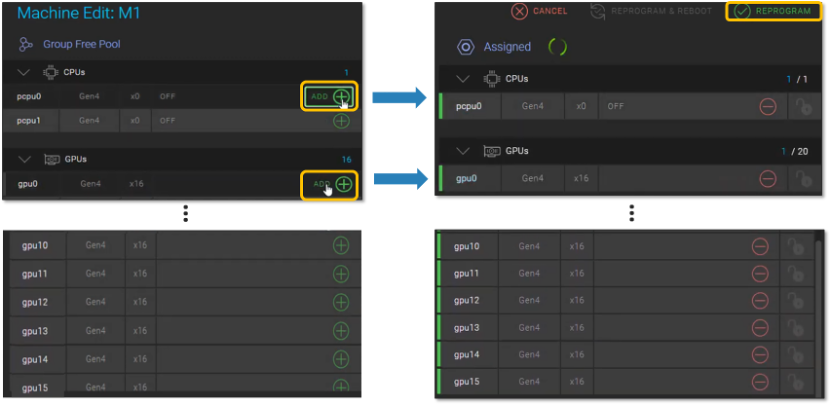

Figure 5. Adding Compute and GPU Resources to Machine M1

Source: Enterprise Strategy Group

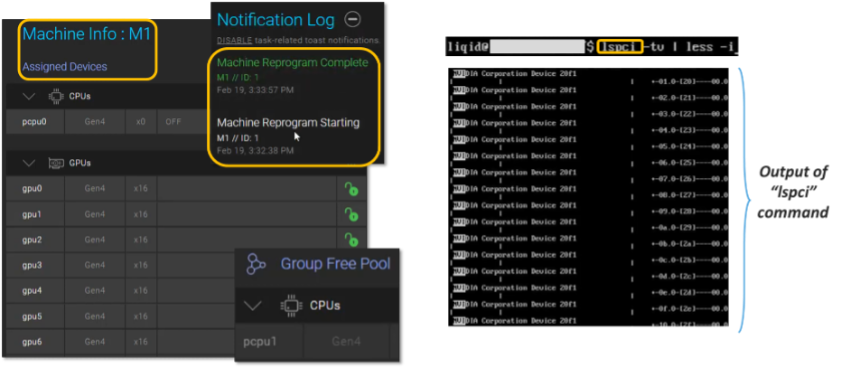

Figure 6. Verifying that Available GPUs are Connected to “M1” Machine

Source: Enterprise Strategy Group

Why This Matters

Increased Data Center Resource Efficiency

ESG Testing

Figure 7. Modified Test Bed with Dell EMC Servers

Source: Enterprise Strategy Group

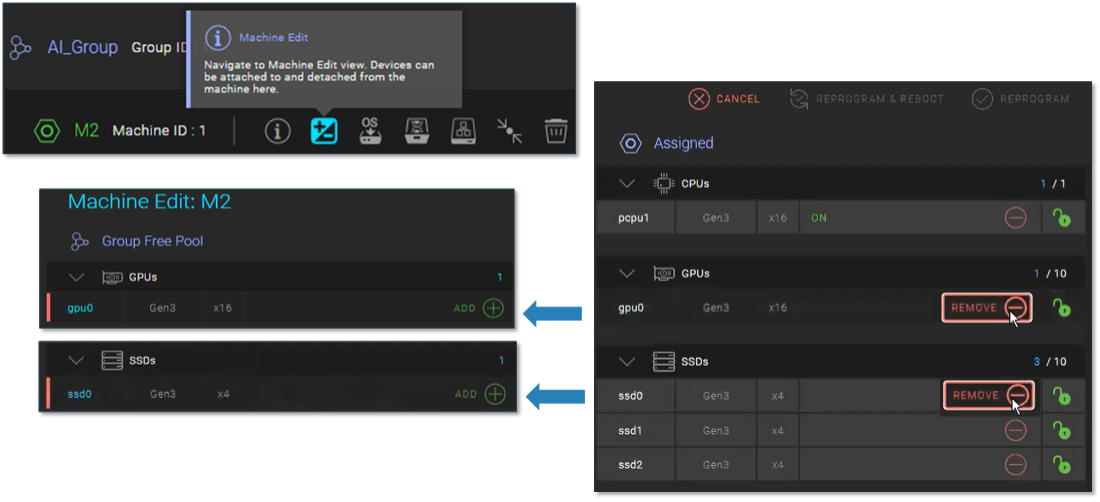

Figure 8. Removing Excess GPU Compute and Storage Capacity from “M2” Machine

Source: Enterprise Strategy Group

Why This Matters

Improved IT Agility

ESG Testing

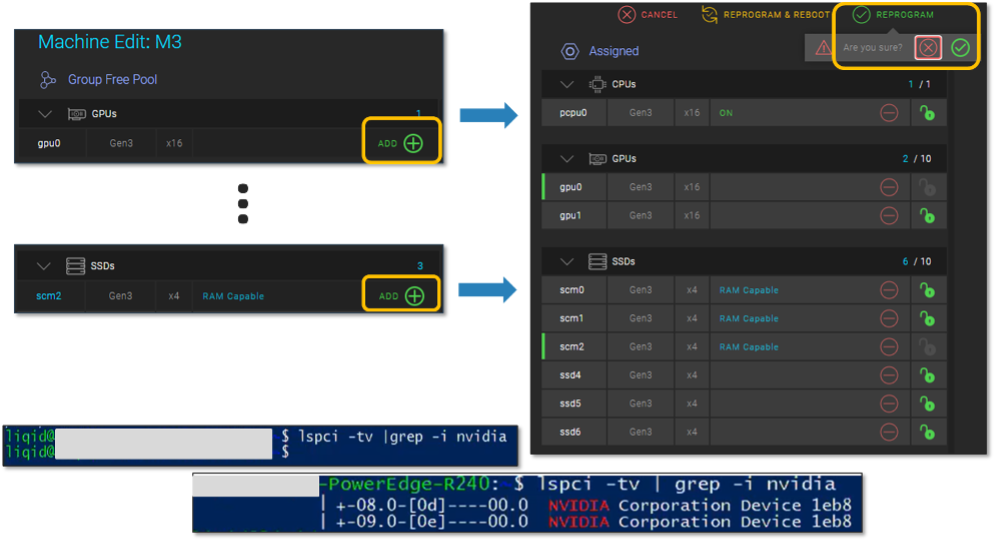

Figure 9. Scaling Up GPU Compute and Storage Capacity on Existing “M3” Machine

Source: Enterprise Strategy Group

Why This Matters

The Bigger Truth

This ESG Technical Validation was commissioned by Liqid and is distributed under license from ESG.

1 A hyperscaler cloud solution is a hardware and software stack offered by a cloud service provider (CSP), such as Amazon Web Services (AWS) Outposts, that enables businesses to deploy and run cloud-like infrastructure (such as compute instances) spanning on-premises data centers and public clouds using a common set of tools and operating procedures.

2 Source: ESG Master Survey Results, 2021 Technology Spending Intentions Survey, Dec 2020.

3 CPUs and RAM are not disaggregated in Liqid CDI. Servers already contain a defined amount of CPU and RAM capacity.

4 With Intel Optane technology, SCM components can extend system memory.

5 Liqid CDI also currently supports InfiniBand switch fabrics.

6 A group enables an IT administrator to allocate specific resources to a user or team. The group can be restricted with Liqid's role-based access control (RBAC) functionality.

7 “lspci” is a command on Unix-like operating systems that lists detailed information about all PCI buses and devices in a given system.

All trademark names are property of their respective companies. Information contained in this publication has been obtained from sources The Enterprise Strategy Group (ESG) considers to be reliable but is not warranted by ESG. This publication may contain opinions of ESG, which are subject to change from time to time. This publication is copyrighted by The Enterprise Strategy Group, Inc. Any reproduction or redistribution of this publication, in whole or in part, whether in hard-copy format, electronically, or otherwise to persons not authorized to receive it, without the express consent of The Enterprise Strategy Group, Inc., is in violation of U.S. copyright law and will be subject to an action for civil damages and, if applicable, criminal prosecution. Should you have any questions, please contact ESG Client Relations at 508.482.0188.

Enterprise Strategy Group is an IT analyst, research, validation, and strategy firm that provides market intelligence and actionable insight to the global IT community.